Came across the United States Patent Application 20100295774, filed by Craig Hennessey of Mirametrix. Essentially, the system creates regions of interest based on the HTML code (div tags) to automatically map gaze X & Y coordinates to the locations of page elements. This is done by accessing the Microsoft Document Object Model of an Internet Explorer browser page to establish the "content tracker", a piece of software that generates the list of areas along with their sizes and on-screen locations, which are then tagged with keywords (e.g., logo, ad, etc.). This software also keeps track of several browser windows, their positions and their interaction states.

"A system for automatic mapping of eye-gaze data to hypermedia content utilizes high-level content-of-interest tags to identify regions of content-of-interest in hypermedia pages. User's computers are equipped with eye-gaze tracker equipment that is capable of determining the user's point-of-gaze on a displayed hypermedia page. A content tracker identifies the location of the content using the content-of-interest tags and a point-of-gaze to content-of-interest linker directly maps the user's point-of-gaze to the displayed content-of-interest. A visible-browser-identifier determines which browser window is being displayed and identifies which portions of the page are being displayed. Test data from plural users viewing test pages is collected, analyzed and reported."

In short, the idea is to have multiple clients equipped with eye trackers that communicate with a server. The central machine coordinates studies and stores the gaze data from each session (in the cloud?). Overall, a strategy that makes perfect sense if your differentiating factor is low cost.
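The core step, mapping a gaze point onto a tagged on-screen region, can be sketched in a few lines. This is a minimal illustration under my own assumptions, not the patented implementation: the `Region` and `BrowserView` types are hypothetical, regions are assumed to be axis-aligned rectangles already extracted from the page (for instance from element bounding boxes), and gaze is assumed to arrive in screen pixels.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Region:
    """Hypothetical tagged rectangle in page coordinates (pixels from the document's top-left)."""
    tag: str
    left: float
    top: float
    width: float
    height: float

    def contains(self, x: float, y: float) -> bool:
        return (self.left <= x < self.left + self.width
                and self.top <= y < self.top + self.height)


@dataclass
class BrowserView:
    """Hypothetical record of where a page viewport sits on screen and how far it is scrolled."""
    screen_x: float
    screen_y: float
    scroll_x: float
    scroll_y: float
    width: float
    height: float


def map_gaze(gx: float, gy: float, view: BrowserView,
             regions: List[Region]) -> Optional[str]:
    """Return the tag of the region under a screen-space gaze point, or None."""
    # Screen -> viewport coordinates; discard gaze outside this window's viewport.
    vx, vy = gx - view.screen_x, gy - view.screen_y
    if not (0 <= vx < view.width and 0 <= vy < view.height):
        return None
    # Viewport -> page coordinates: add the scroll offset so regions stay fixed to content.
    px, py = vx + view.scroll_x, vy + view.scroll_y
    # First match wins; nested elements would need an explicit priority rule.
    for region in regions:
        if region.contains(px, py):
            return region.tag
    return None
```

For example, with a logo region at (0, 0, 200, 80) and a viewport at screen position (100, 150), a gaze sample at (150, 180) maps to "logo"; after scrolling the page down 100 pixels, the same gaze sample no longer hits it.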

Tuesday, December 14, 2010

Monday, November 15, 2010

SMI RED500

Just days after the Tobii TX300 was launched, SMI counters with the introduction of the world's first 500Hz remote binocular eye tracker. SMI seriously ramps up the competition in high-speed remote systems, surpassing the Tobii TX300 by a hefty 200Hz. The RED500 has an operating distance of 60-80cm and a 40x40 trackbox at 70cm, with a reported accuracy of <0.4 degrees under typical (optimal?) settings. Real-world performance evaluation by an independent third party remains to be seen. Not resting on their laurels, SMI regains the king-of-the-hill position with an impressive achievement that demonstrates how competitive the field has become. See the technical specs for more information.
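To put the numbers in perspective, the sample rate and the quoted accuracy can be converted into a time step and an on-screen distance. A small sketch; it assumes the quoted <0.4 degrees is a pure angular error measured at the 70cm reference distance, and it says nothing about real-world accuracy.

```python
import math


def sample_interval_ms(rate_hz: float) -> float:
    """Time between consecutive gaze samples in milliseconds."""
    return 1000.0 / rate_hz


def angle_to_screen_cm(angle_deg: float, distance_cm: float) -> float:
    """On-screen extent subtended by a visual angle at a given viewing distance,
    symmetric about the line of sight: 2 * d * tan(theta / 2)."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


for name, hz in [("RED500", 500), ("Tobii TX300", 300)]:
    print(f"{name}: {sample_interval_ms(hz):.2f} ms between samples")
print(f"0.4 deg at 70 cm = {angle_to_screen_cm(0.4, 70):.2f} cm on screen")
```

This gives 2 ms between samples for the RED500 versus about 3.3 ms at 300Hz, and roughly 0.49 cm (about 5 mm) on screen for 0.4 degrees at 70cm.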

Labels: eye tracker

Exploring the potential of context-sensitive CADe in screening mammography (Tourassi et al, 2010)

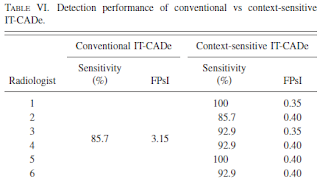

Georgia D. Tourassi, Maciej A. Mazurowski, and Brian P. Harrawood at Duke University's Ravin Advanced Imaging Laboratories, in collaboration with Elizabeth A. Krupinski, present a novel method of combining eye gaze data with computer-assisted detection algorithms to improve detection rates for malignant masses in mammography. This contextualized method holds potential for personalized diagnostic support.

Purpose: Conventional computer-assisted detection (CADe) systems in screening mammography provide the same decision support to all users. The aim of this study was to investigate the potential of a context-sensitive CADe system which provides decision support guided by each user’s focus of attention during visual search and reporting patterns for a specific case.

Methods: An observer study for the detection of malignant masses in screening mammograms was conducted in which six radiologists evaluated 20 mammograms while wearing an eye-tracking device. Eye-position data and diagnostic decisions were collected for each radiologist and case they reviewed. These cases were subsequently analyzed with an in-house knowledge-based CADe system using two different modes: conventional mode with a globally fixed decision threshold and context-sensitive mode with a location-variable decision threshold based on the radiologists’ eye dwelling data and reporting information.

Results: The CADe system operating in conventional mode had 85.7% per-image malignant mass sensitivity at 3.15 false positives per image (FPsI). The same system operating in context-sensitive mode provided personalized decision support at 85.7%–100% sensitivity and 0.35–0.40 FPsI to all six radiologists. Furthermore, the context-sensitive CADe system could improve the radiologists’ sensitivity and reduce their performance gap more effectively than conventional CADe.

Conclusions: Context-sensitive CADe support shows promise in delineating and reducing the radiologists’ perceptual and cognitive errors in the diagnostic interpretation of screening mammograms more effectively than conventional CADe.
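The abstract does not spell out how the location-variable threshold is derived, so the following is a purely illustrative sketch of the difference between the two modes, not the authors' method. Everything specific here is an assumption: the 50-pixel radius, the 1-second dwell cutoff, the two threshold values, and the choice to suppress prompts where the reader already reported a finding.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Candidate:
    """A CADe detection: image position (pixels) and a suspiciousness score in [0, 1]."""
    x: float
    y: float
    score: float


def _near(ax: float, ay: float, bx: float, by: float, radius: float) -> bool:
    return (ax - bx) ** 2 + (ay - by) ** 2 <= radius ** 2


def conventional(cands: List[Candidate], threshold: float) -> List[Candidate]:
    """Conventional mode: one global threshold for every location and every reader."""
    return [c for c in cands if c.score >= threshold]


def context_sensitive(cands: List[Candidate],
                      fixations: List[Tuple[float, float, float]],
                      reported: List[Tuple[float, float]],
                      radius: float = 50.0, t_dwelled: float = 0.3,
                      t_unvisited: float = 0.6,
                      min_dwell_ms: float = 1000.0) -> List[Candidate]:
    """Hypothetical per-location threshold driven by one reader's gaze and report.

    fixations: (x, y, duration_ms) tuples; reported: (x, y) locations the reader marked.
    """
    prompts = []
    for c in cands:
        # Hypothetical rule: no prompt where the reader already reported a finding.
        if any(_near(rx, ry, c.x, c.y, radius) for rx, ry in reported):
            continue
        dwell = sum(d for fx, fy, d in fixations if _near(fx, fy, c.x, c.y, radius))
        # Long dwell without a report gets the lower threshold; otherwise the stricter one.
        thr = t_dwelled if dwell >= min_dwell_ms else t_unvisited
        if c.score >= thr:
            prompts.append(c)
    return prompts
```

The point of the sketch is only that the same candidate list yields different prompts for different readers once their gaze and reports enter the decision.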

Labels: radiology

Monday, November 8, 2010

GazeCom and SMI demonstrate automotive guidance system

"In order to determine the effectiveness of gaze guidance, within the project, SMI developed an experimental driving simulator with integrated eye tracking technology. A driving safety study in a city was set up and testing in that environment has shown that the number of accidents was significantly lower with gaze guidance than without, while most of the drivers didn’t consciously notice the guiding visual cues."

Christian Villwock, Director for Eye and Gaze Tracking Systems at SMI: “We have shown that visual performance can significantly be improved by gaze contingent gaze guidance. This introduces huge potential in applications where expert knowledge has to be transferred or safety is critical, for example for radiological image analysis.”

"Within the GazeCom project, funded by the EU within the Future and Emerging Technologies (FET) program, the impact of gaze guidance on what is perceived and communicated effectively has been determined in a broad range of tasks of varying complexity. This included basic research in the understanding of visual perception and brain function up to the level where the guidance of gaze becomes feasible." (source)
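The quotes do not describe how the guiding cues are triggered. As a purely hypothetical illustration of what "gaze-contingent" can mean, this sketch shows a cue only while the driver's gaze is away from a target and switches it off for good once gaze lands near it, with a cap on total cue time to keep it brief. The 80-pixel radius, the 120 ms cap and the single-target model are assumptions, not SMI's or GazeCom's design.

```python
import math
from typing import List, Tuple


def cue_schedule(gaze: List[Tuple[float, float]], target: Tuple[float, float],
                 dt_ms: float, near_px: float = 80.0,
                 max_cue_ms: float = 120.0) -> List[bool]:
    """Per gaze sample, decide whether a guiding cue at `target` should be visible.

    Hypothetical policy: show the cue while gaze is away from the target, for at most
    `max_cue_ms` in total, and switch it off for good once gaze lands within `near_px`.
    """
    shown_ms = 0.0
    reached = False
    decisions = []
    for gx, gy in gaze:
        if math.hypot(gx - target[0], gy - target[1]) <= near_px:
            reached = True  # gaze has arrived, so the cue has done its job
        on = not reached and shown_ms < max_cue_ms
        if on:
            shown_ms += dt_ms
        decisions.append(on)
    return decisions
```

Keeping the cue short and removing it as soon as gaze arrives is one way a cue could steer attention without being consciously noticed, which is the effect the study reports.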

Labels: mobility, navigation

Wednesday, November 3, 2010

SMI Releases iViewX 2.5

Today SensoMotoric Instruments released a new version of its iViewX software, which offers a number of fixes and improvements. Download here.

Improvements

- NEW device: MEG 250

- RED5: improved tracking stability

- RED: improved pupil diameter calculation

- RED: improved distance measurement

- RED: improved 2 and 5 point calibration model

- file transfer server is now installed with iView X

- added configurable parallel port address

Fixes

- RED5 camera dropouts in 60Hz mode on Clevo Laptop

- LPT_IO and PIODIO are now initialized correctly on startup

- RED standalone mode can be used with all calibration methods via remote commands

- lateral offset in RED5 head position visualization

- HED: Use TimeStamp in [ms] as Scene Video Overlay

- improved rejection parameters for NNL Devices

- crash when using ET_CAL in standalone mode

- strange behaviour with ET_REM and eT_REM; lookup in the command list is now case-insensitive

- RED5: Default speed is 60Hz for RED and 250Hz for RED250

- and many more small fixes and improvements
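The ET_REM/eT_REM fix boils down to normalizing command names before lookup. A minimal sketch of that idea; the command names are borrowed from the release notes, but the handlers, argument handling and table layout are hypothetical and are not iView X's code or protocol.

```python
from typing import Callable, Dict


def make_command_table(handlers: Dict[str, Callable[..., str]]) -> Dict[str, Callable[..., str]]:
    """Index command handlers by upper-cased name so lookup ignores case."""
    return {name.upper(): fn for name, fn in handlers.items()}


def dispatch(table: Dict[str, Callable[..., str]], line: str) -> str:
    """Split a command line into name and arguments and call the matching handler."""
    parts = line.split()
    if not parts:
        raise ValueError("empty command line")
    name, args = parts[0], parts[1:]
    handler = table.get(name.upper())  # "eT_REM" and "ET_REM" resolve to the same entry
    if handler is None:
        raise KeyError(f"unknown command: {name}")
    return handler(*args)
```

Normalizing once when the table is built keeps each lookup a plain dictionary access.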

Labels: SMI IView RED

Tuesday, November 2, 2010

Optimization and Dynamic Simulation of a Parallel Three Degree-of-Freedom Camera Orientation System (T. Villgrattner, 2010)

Moving a camera at 2500 degrees per second is such an awesome accomplishment that I can't help myself; here is a shamelessly long quote from IEEE Spectrum:

"German researchers have developed a robotic camera that mimics the motion of real eyes and even moves at superhuman speeds. The camera system can point in any direction and is also capable of imitating the fastest human eye movements, which can reach speeds of 500 degrees per second. But the system can also move faster than that, achieving more than 2500 degrees per second. It would make for very fast robot eyes. Led by Professor Heinz Ulbrich at the Institute of Applied Mechanics at the Technische Universität München, a team of researchers has been working on superfast camera orientation systems that can reproduce the human gaze.

In many experiments in psychology, human-computer interaction, and other fields, researchers want to monitor precisely what subjects are looking at. Gaze can reveal not only what people are focusing their attention on but it also provides clues about their state of mind and intentions. Mobile systems to monitor gaze include eye-tracking software and head-mounted cameras. But they're not perfect; sometimes they just can't follow a person's fast eye movements, and sometimes they provide ambiguous gaze information.

In collaboration with their project partners from the Chair for Clinical Neuroscience, Ludwig-Maximilians Universität München, Dr. Erich Schneider and Professor Thomas Brandt, the Munich team, which is supported in part by the CoTeSys Cluster, is developing a system to overcome those limitations. The system, propped on a person's head, uses a custom-made eye tracker to monitor the person's eye movements. It then precisely reproduces those movements using a superfast actuator-driven mechanism with yaw, pitch, and roll rotation, like a human eyeball. When the real eye moves, the robot eye follows suit.

The engineers at the Institute of Applied Mechanics have been working on the camera orientation system over the past few years. Their previous designs had 2 degrees of freedom (DOF). Now researcher Thomas Villgrattner is presenting a system that improves on the earlier versions and features not 2 but 3 DOF. He explains that existing camera-orientation systems with 3 DOF that are fast and lightweight rely on model aircraft servo actuators. The main drawback of such actuators is that they can introduce delays and require gear boxes.

So Villgrattner sought a different approach. Because this is a head-mounted device, it has to be lightweight and inconspicuous; you don't want it rattling and shaking on the subject's scalp. Which actuators to use? The solution consists of an elegant parallel system that uses ultrasonic piezo actuators. The piezos transmit their movement to a prismatic joint, which in turn drives small push rods attached to the camera frame. The rods have spherical joints on either end, and this kind of mechanism is known as a PSS, or prismatic, spherical, spherical, chain. It's a "quite nice mechanism," says Masaaki Kumagai, a mechanical engineering associate professor at Tohoku Gakuin University, in Miyagi, Japan, who was not involved in the project. "I can't believe they made such a high speed/acceleration mechanism using piezo actuators."

The advantage is that it can reach high speeds and accelerations with small actuators, which remain on a stationary base, so they don't add to the inertial mass of the moving parts. And the piezos also provide high forces at low speeds, so no gear box is needed. Villgrattner describes the device's mechanical design and kinematics and dynamics analysis in a paper titled "Optimization and Dynamic Simulation of a Parallel Three Degree-of-Freedom Camera Orientation System," presented at last month's IEEE/RSJ International Conference on Intelligent Robots and Systems.

The current prototype weighs in at just 100 grams. It was able to reproduce the fastest eye movements, known as saccades, and also perform movements much faster than what our eyes can do. The system, Villgrattner tells me, was mainly designed for a "head-mounted gaze-driven camera system," but he adds that it could also be used "for remote eye trackers, for eye related 'Wizard of Oz' tests, and as artificial eyes for humanoid robots." In particular, this last application, eyes for humanoid robots, appears quite promising, and the Munich team is already working on that. Current humanoid eyes are rather simple, typically just static cameras, and that's understandable given all the complexity in these machines. It would be cool to see robots with humanlike, or superhuman, gaze capabilities.

Below is a video of the camera-orientation system (the head-mount device is not shown). First, it moves the camera in all three single axes (vertical, horizontal, and longitudinal) with an amplitude of about 30 degrees. Next it moves simultaneously around all three axes with an amplitude of about 19 degrees. Then it performs fast movements around the vertical axis at 1000 degrees/second and also high dynamic movements around all axes. Finally, the system reproduces natural human eye movements based on data from an eye-tracking system." (source)
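Why the speed headroom matters can be shown with a deliberately crude model: one axis that follows recorded gaze angles but may move at most v_max degrees per second. This is a hypothetical kinematic toy, not Villgrattner's dynamic model; it ignores acceleration limits, inertia and latency, and the 1 kHz sampling and the idealized 30-degree ramp at 500 degrees/second used as a test saccade are assumptions.

```python
from typing import List


def track(targets_deg: List[float], dt_s: float, v_max_deg_s: float) -> List[float]:
    """Follow sampled target angles with one axis whose speed is capped at v_max.

    Purely kinematic toy: no acceleration limit, inertia, or control delay.
    """
    pos = targets_deg[0]
    max_step = v_max_deg_s * dt_s  # largest angle the axis may cover in one sample
    trace = []
    for target in targets_deg:
        pos += max(-max_step, min(max_step, target - pos))
        trace.append(pos)
    return trace


def max_lag(targets: List[float], trace: List[float]) -> float:
    """Largest angular error between commanded and reached angle."""
    return max(abs(t - p) for t, p in zip(targets, trace))


# Idealized 30-degree saccade at a constant 500 deg/s, sampled at 1 kHz, then held.
dt = 0.001
saccade = [min(30.0, 500.0 * i * dt) for i in range(100)]
fast = max_lag(saccade, track(saccade, dt, 2500.0))  # axis rated well above saccade speed
slow = max_lag(saccade, track(saccade, dt, 250.0))   # hypothetical axis at half saccade speed
```

With the 2500 degrees/second cap the axis follows the ramp essentially exactly, while the hypothetical 250 degrees/second axis is 15 degrees behind when the target completes its jump.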

Labels: inspiration, robot, technology

Monday, November 1, 2010

ScanMatch: A novel method for comparing fixation sequences (Cristino et al, 2010)

Uses an algorithm designed for comparing DNA sequences to compare eye movement sequences. Radical, and with MATLAB source code, fantastic! It appears to be noise tolerant and to outperform the traditional Levenshtein distance.
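For reference, the traditional baseline codes each scanpath as a string of region letters and scores two scanpaths by string edit distance. A standard Levenshtein implementation is below; the example strings in the usage note are made up.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of insertions, deletions and substitutions turning a into b."""
    prev = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                 # delete ca
                           cur[j - 1] + 1,              # insert cb
                           prev[j - 1] + (ca != cb)))   # substitute (free if equal)
        prev = cur
    return prev[-1]
```

For example, "ABCD" and "ABED" are one substitution apart. Note that every substitution costs the same, however far apart the two screen regions are.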

Abstract

We present a novel approach to comparing saccadic eye movement sequences based on the Needleman–Wunsch algorithm used in bioinformatics to compare DNA sequences. In the proposed method, the saccade sequence is spatially and temporally binned and then recoded to create a sequence of letters that retains fixation location, time, and order information. The comparison of two letter sequences is made by maximizing the similarity score computed from a substitution matrix that provides the score for all letter pair substitutions and a penalty gap. The substitution matrix provides a meaningful link between each location coded by the individual letters. This link could be distance but could also encode any useful dimension, including perceptual or semantic space. We show, by using synthetic and behavioral data, the benefits of this method over existing methods. The ScanMatch toolbox for MATLAB is freely available online (www.scanmatch.co.uk).

- Filipe Cristino, Sebastiaan Mathôt, Jan Theeuwes, and Iain D. Gilchrist

ScanMatch: A novel method for comparing fixation sequences

Behav Res Methods 2010 42:692-700; doi:10.3758/BRM.42.3.692
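The two steps in the abstract, binning fixations into a token sequence and aligning two sequences with Needleman–Wunsch, can be sketched compactly. This is not the ScanMatch toolbox code: integer cell indices stand in for its letters, and the grid size, the 100 ms temporal bin, the distance-based substitution score (threshold minus the distance between cells), the gap penalty of -1 and the normalization are illustrative assumptions.

```python
import math
from typing import Callable, List, Tuple


def recode(fixations: List[Tuple[float, float, float]], cols: int, rows: int,
           width: float, height: float, bin_ms: float) -> List[int]:
    """Spatially and temporally bin (x, y, duration_ms) fixations into cell tokens.

    Each fixation is repeated once per `bin_ms` of its duration (rounded, at least once).
    """
    seq = []
    for x, y, dur in fixations:
        col = min(int(x / width * cols), cols - 1)
        row = min(int(y / height * rows), rows - 1)
        seq.extend([row * cols + col] * max(1, round(dur / bin_ms)))
    return seq


def distance_substitution(cols: int, threshold: float) -> Callable[[int, int], float]:
    """Substitution score: positive for nearby cells, negative beyond `threshold` cells."""
    def score(a: int, b: int) -> float:
        ra, ca = divmod(a, cols)
        rb, cb = divmod(b, cols)
        return threshold - math.hypot(ra - rb, ca - cb)
    return score


def needleman_wunsch(s: List[int], t: List[int],
                     score: Callable[[int, int], float], gap: float) -> float:
    """Global alignment score maximized over substitutions and gaps."""
    n, m = len(s), len(t)
    F = [[0.0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        F[i][0] = i * gap
    for j in range(1, m + 1):
        F[0][j] = j * gap
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            F[i][j] = max(F[i - 1][j - 1] + score(s[i - 1], t[j - 1]),  # align the pair
                          F[i - 1][j] + gap,                            # gap in t
                          F[i][j - 1] + gap)                            # gap in s
    return F[n][m]


def similarity(s: List[int], t: List[int], cols: int = 4,
               threshold: float = 2.0, gap: float = -1.0) -> float:
    """Alignment score normalized so that two identical sequences score 1.0."""
    score = distance_substitution(cols, threshold)
    return needleman_wunsch(s, t, score, gap) / (threshold * max(len(s), len(t)))
```

On a 4x3 grid, fixations at (100, 100) for 200 ms and (700, 500) for 300 ms on an 800x600 screen recode to [0, 0, 11, 11, 11]. Replacing the first two tokens with the neighbouring cell 1 scores 0.8, while replacing them with cell 3, three cells away, scores 0.4, even though a plain edit distance would count two substitutions in both cases.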

Labels: algorithm, noisy gaze data, open source

An improved algorithm for automatic detection of saccades in eye movement data and for calculating saccade parameters (Behrens et al, 2010)

Abstract

"This analysis of time series of eye movements is a saccade-detection algorithm that is based on an earlier algorithm. It achieves substantial improvements by using an adaptive-threshold model instead of fixed thresholds and using the eye-movement acceleration signal. This has four advantages: (1) Adaptive thresholds are calculated automatically from the preceding acceleration data for detecting the beginning of a saccade, and thresholds are modified during the saccade. (2) The monotonicity of the position signal during the saccade, together with the acceleration with respect to the thresholds, is used to reliably determine the end of the saccade. (3) This allows differentiation between saccades following the main-sequence and non-main-sequence saccades. (4) Artifacts of various kinds can be detected and eliminated. The algorithm is demonstrated by applying it to human eye movement data (obtained by EOG) recorded during driving a car. A second demonstration of the algorithm detects microsleep episodes in eye movement data."

- F. Behrens, M. MacKeben, and W. Schröder-Preikschat

An improved algorithm for automatic detection of saccades in eye movement data and for calculating saccade parameters. Behav Res Methods 2010 42:701-708; doi:10.3758/BRM.42.3.701
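Advantages (1) and (2) can be illustrated with a simplified detector on a single-axis position trace. A threshold recomputed from the preceding window of acceleration samples marks the onset, and the end is placed where the position stops changing monotonically. This is my own simplified sketch, not the published algorithm: the 200 ms window, the factor k = 3, the floor on the threshold and the plain finite-difference derivatives are assumptions, and it does not attempt main-sequence classification or artifact rejection.

```python
import statistics
from typing import List, Tuple


def detect_saccades(pos: List[float], fs: float, window_s: float = 0.2,
                    k: float = 3.0, min_thr: float = 1000.0) -> List[Tuple[int, int]]:
    """Return (onset, offset) sample indices of saccades in a 1-D position trace (degrees).

    Onset: |acceleration| exceeds k * SD of the preceding window of acceleration samples
    (floored at min_thr deg/s^2). Offset: first sample where the position stops changing
    in the saccade's direction, i.e. where monotonicity of the position signal ends.
    """
    dt = 1.0 / fs
    vel = [(pos[i + 1] - pos[i]) / dt for i in range(len(pos) - 1)]
    acc = [(vel[i + 1] - vel[i]) / dt for i in range(len(vel) - 1)]
    w = max(2, int(window_s * fs))
    saccades = []
    i = w
    while i < len(acc):
        # Adaptive threshold computed from the preceding acceleration samples only.
        thr = max(min_thr, k * statistics.pstdev(acc[i - w:i]))
        if abs(acc[i]) > thr and vel[i + 1] != 0:
            direction = 1 if vel[i + 1] > 0 else -1
            j = i + 1
            while j < len(vel) and vel[j] * direction > 0:
                j += 1  # still moving monotonically in the same direction
            saccades.append((i + 1, j))
            # Resume after the saccade; the window briefly includes its acceleration
            # spikes, which raises the threshold (the published method adapts more carefully).
            i = j
        else:
            i += 1
    return saccades
```

On a synthetic trace sampled at 1 kHz (300 samples at 0 degrees, a 20-sample ramp to 6 degrees, then 200 samples at 6 degrees), the sketch reports a single saccade from sample 299 to sample 319.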

Labels: algorithm

Thursday, October 28, 2010

Gaze Tracker 2.0 Preview

On my 32nd birthday I'd like to celebrate by sharing this video highlighting some of the features in the latest version of GT2.0, which I've been working on with Javier San Agustin and the GT forum. Open source eye tracking has never looked better. Enjoy!

HD video available (click 360p and select 720p)

Labels: gazetracker, ITU, low cost, open source

Friday, October 1, 2010